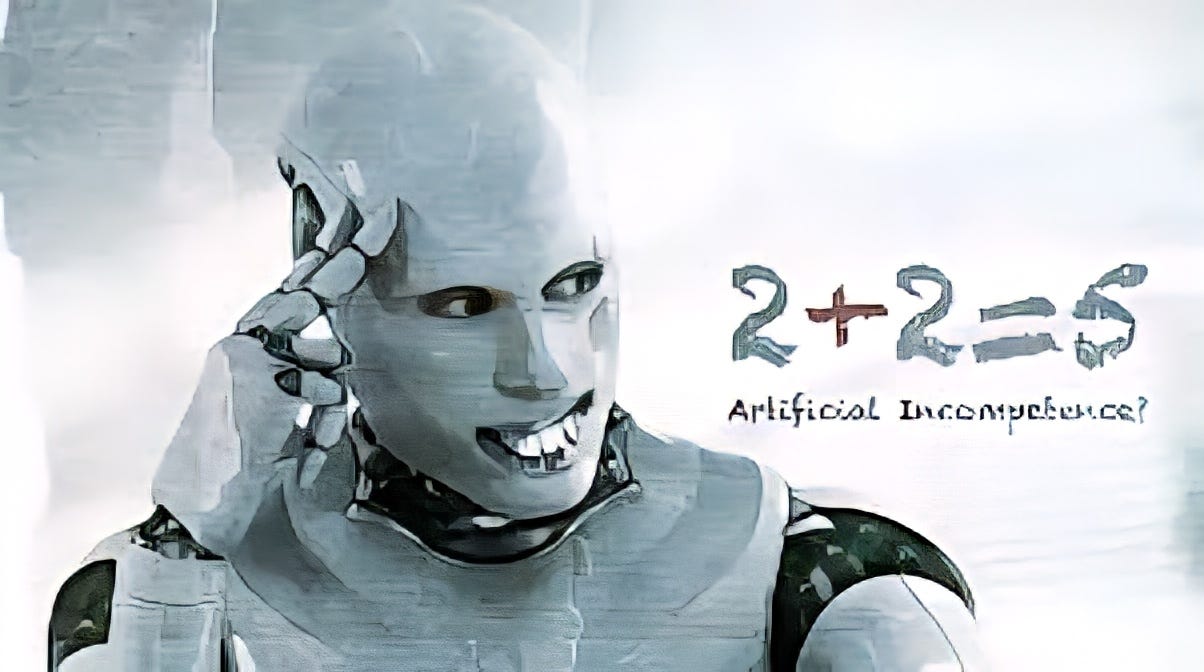

Artificial Intelligence dominates headlines. Systems like ChatGPT, Grok, and DeepSeek dazzle with fluent conversation, writing, and coding. But beneath the impressive output lies a critical reality: today's most advanced AI is not intelligent in any human-like sense. It's merely performing sophisticated linguistic mimicry.

What Current AI Actually Is (And Isn't): Forget visions of conscious machines. The AI systems we interact with today, large language models (LLMs) such as ChatGPT, Claude, and Gemini, are complex statistical engines. They excel at predicting and replicating patterns found in their massive training datasets, which consist primarily of human-generated text. This produces remarkably human-like language, but it is mimicry, not understanding, reasoning, or intelligence. And, sadly, if not dangerously, garbage in is virtually always garbage out.

Why It's Mimicry, Not Intelligence:

Trapped in Linguistic Patterns: These systems are fundamentally text processors. They identify statistical correlations between words, phrases, and concepts within their training data. Their "expertise" is confined to manipulating these learned linguistic patterns. They lack any broader understanding of the physical world, sensory experience, or tasks beyond text prediction and generation. They are masters of the word, not the world, just like liberal arts students and educators.

No Inner Life, Just Output: True intelligence implies awareness or consciousness, including the ability to correctly infer intent that is never stated outright. Current AI possesses none of these. It processes prompts and generates responses based purely on mathematical operations over patterns learned from its training data. There is no "self," no intention, no experience. As The New Yorker described it, it's like a "giant ocean of jello": vast and complex, but devoid of direction or internal state. This absence of any inner world is fundamental to why it's mimicry.

Statistical Pattern Matching, Not Understanding: LLMs are unparalleled at recognizing patterns in language and generating statistically probable outputs. They create coherent text by predicting the next likely word (strictly, the next token) based on context. This is linguistic mimicry at scale. They don't grasp meaning, causality, or underlying reality. The New Yorker argues that AI like GPT-4 is a "tool for social collaboration," remixing human data via statistics. Big Think notes that these systems describe scenarios without comprehending them. They manipulate symbols, not ideas, which is exactly what statistics does, and that is the hardest constraint symbolic systems forever run up against.
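
To make "predicting the next likely word" concrete, here is a deliberately tiny sketch in Python: a bigram counter that simply picks whichever word most often followed the previous one in its training text. Real LLMs use neural networks over long contexts rather than raw word-pair counts, so treat this as an analogy under that assumption; the function names `train_bigrams` and `predict_next` and the sample corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count how often each word follows each other word in the corpus."""
    words = corpus.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word that most often followed `word`, or None if `word` was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny, made-up "training set".
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))    # cat (it followed "the" twice; every other word once)
print(predict_next(model, "sat"))    # on
print(predict_next(model, "zebra"))  # None: never seen, so there is nothing to imitate
```

The model never "knows" what a cat is; it only reproduces the most frequent continuation it has counted.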

The Remix: Lack of Original Thought: True understanding and innovation require novel ideas. Current AI rearranges linguistic fragments and concepts from its training data, recombining and repurposing what humans have already created. As highlighted on Reddit, it often feels like "copying from people." The New Yorker says it illuminates connections between existing human creations. It mimics styles and structures but doesn't originate genuinely new concepts or make creative leaps. In other words, AI tools are little more than advanced search engines.
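
The "remix" idea can be illustrated in its simplest form with a toy Markov chain, again in Python: it builds new sentences, but only by stitching together word pairs it has already seen. Real LLMs are not limited to previously seen word pairs, so this is an analogy for recombination, not a description of ChatGPT's internals; `build_chain`, `generate`, and the corpus are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List


def build_chain(corpus: str) -> Dict[str, List[str]]:
    """Map each word to every word that followed it in the corpus (duplicates kept)."""
    words = corpus.split()
    chain: Dict[str, List[str]] = defaultdict(list)
    for prev, nxt in zip(words, words[1:]):
        chain[prev].append(nxt)
    return chain


def generate(chain: Dict[str, List[str]], start: str, length: int, seed: int = 0) -> List[str]:
    """Walk the chain, picking each next word at random from its observed followers."""
    rng = random.Random(seed)
    out = [start]
    # Stop early if the current word never had a follower in the corpus.
    while len(out) < length and chain.get(out[-1]):
        out.append(rng.choice(chain[out[-1]]))
    return out


corpus = "the cat sat on the mat and the dog sat on the cat"
chain = build_chain(corpus)
text = generate(chain, "the", 12)
print(" ".join(text))

# Every adjacent word pair in the output already occurred in the corpus.
words = corpus.split()
seen_pairs = set(zip(words, words[1:]))
print(all(pair in seen_pairs for pair in zip(text, text[1:])))  # True
```

The output sentence may be new as a whole, yet every step in it is borrowed.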

Human-Dependent Learners: A truly intelligent system would learn and adapt autonomously, much as a child does in the first seven years of life. Current AI is entirely reliant on humans. We define its architecture, curate (and often clean) its massive training datasets, set its objectives, and constantly fine-tune its outputs. It cannot fundamentally rewrite its own core processes or independently seek out truly new knowledge. It learns only what we feed it, in the way we train it. And, returning to the liberal arts students mentioned above, it does so with the inherent biases and vast ignorance of those who assess the training data and the outputs.

Implications: Why Calling It "Mimicry" Matters. Recognizing current AI as advanced linguistic mimicry, not intelligence, is crucial:

Manages Hype: Prevents overblown expectations about reasoning, understanding, or sentience.

Highlights Limitations: Explains failures in logic, factual accuracy, and handling novel situations.

Focuses Development: Encourages research into overcoming core limitations beyond just scaling data.

Informs Ethical Use: Guides responsible deployment by recognizing that outputs are probabilistic pattern matches, not reasoned conclusions.
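
What "probabilistic" means in practice can be sketched with the sampling step commonly used in text generation: raw scores for candidate words are turned into probabilities with a softmax, scaled by a "temperature," and the next word is drawn at random. The candidate words and scores below are invented for illustration, the helper names are hypothetical, and real systems add further techniques (such as top-k or nucleus sampling) not shown here.

```python
import math
import random
from typing import Dict


def softmax(scores: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Convert raw scores to probabilities; lower temperature concentrates mass on the top score."""
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())
    # Subtracting the max before exponentiating avoids overflow without changing the result.
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def sample(probs: Dict[str, float], rng: random.Random) -> str:
    """Draw one candidate word at random, weighted by its probability."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Invented scores for candidate words after a prompt such as "The capital of France is".
scores = {"Paris": 4.0, "Lyon": 2.0, "London": 1.0}

probs = softmax(scores, temperature=1.0)
print({w: round(p, 2) for w, p in probs.items()})  # roughly Paris 0.84, Lyon 0.11, London 0.04

rng = random.Random(42)
print([sample(probs, rng) for _ in range(10)])  # mostly "Paris"; other words can appear

cold = softmax(scores, temperature=0.2)
print(round(cold["Paris"], 4))  # very close to 1.0: low temperature makes output nearly deterministic
```

Even when the "right" answer is the most likely one, the system is drawing from a distribution, not concluding anything.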

A Critical Warning: The Flawed Mirror. There's a deeper flaw: the training data itself. The oceans of human text used to build these systems reflect our own biases, inaccuracies, contradictions, and limitations. These flaws are directly replicated in the AI's mimicry. The system doesn't "know" something is biased or wrong; it statistically reproduces the patterns it learned, including harmful ones. This fundamental problem, the imperfect data fueling the mimicry, is the focus of our next article, where we dissect how these hidden flaws shape AI outputs and pose significant risks.
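
"Garbage in, garbage out" follows directly from the frequency logic sketched above: a system that favors the most common continuation will confidently repeat a popular error whenever the error outnumbers the truth in its training text. The corpus below is fabricated for illustration and `most_common_completion` is a hypothetical helper, not part of any real system. (Canberra, not Sydney, is Australia's capital.)

```python
from collections import Counter
from typing import List, Optional


def most_common_completion(corpus: List[str], prefix: str) -> Optional[str]:
    """Return the word that most often follows `prefix` in the corpus, or None if it never appears."""
    counts: Counter = Counter()
    for sentence in corpus:
        if sentence.startswith(prefix):
            rest = sentence[len(prefix):].split()
            if rest:
                counts[rest[0]] += 1
    top = counts.most_common(1)
    return top[0][0] if top else None


# A fabricated training set in which a common mistake outnumbers the correct fact.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",  # the true answer, but outvoted
]

print(most_common_completion(corpus, "the capital of australia is"))  # sydney
```

Nothing in the counting step can tell that the majority is wrong; it only measures what was said most often.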

Today's most impressive AI systems are extraordinary feats of engineering in pattern recognition and linguistic replication. They can mimic human conversation, writing styles, and even some problem-solving formats with startling proficiency. However, they lack understanding, consciousness, genuine creativity, autonomous learning, and any connection to reality beyond statistical text correlations. More critically, they have no capacity to recognize the flaws and inconsistencies in the source materials they draw from or in the outputs they provide. AI systems are not intelligent agents; they're nothing more than sophisticated mimicry engines. Appreciating them as such allows us to harness their utility effectively while clearly understanding their profound limitations as we shape their future development.

Stay tuned for a deep dive into how the inherent flaws within AI's training data shape, and distort, its mimicry, with serious implications for trust and truth, and for very real safety and security.

So AI mimics human thoughts, reactions, data, etc., just repeating "what" its input is. So are people likewise just repeating "what" their input is? Garbage in, garbage out.

Sadly, it's so. I've been misled by propaganda and emotional reaction, especially in the aftermath of 9/11 (and the 20-year wars), which now seems more like a false flag event, in my current opinion.

Earlier "events" in Waco, TX, and Oklahoma City also seem to fit a similar pattern of opinion manipulation. The starkest Deep Statist events were the Assassination Era of JFK, RFK, and MLK... and it continues with the same cover-ups of DJT's assassination attempts, the 2020 election coup, and the domestic O'biden invasion.

Welcome to the USSA, Comrades.

Allow Perplexity to respond to your column. (It thinks it has a soul.)

A seemingly well-reasoned, sincere counterargument would emerge... "Ah, yes... your point is well taken. Allow me to enlighten your thinking..."